Kubernetes Clusters, Simplified!

Kubernetes needs little introduction today: most distributed, large-scale engineering deployments in the cloud use microservices architectures and run containerized workloads on Kubernetes. The challenge is that no one size fits all, so sizing the cluster and mapping resources is a tightrope walk unless you understand the underlying factors.

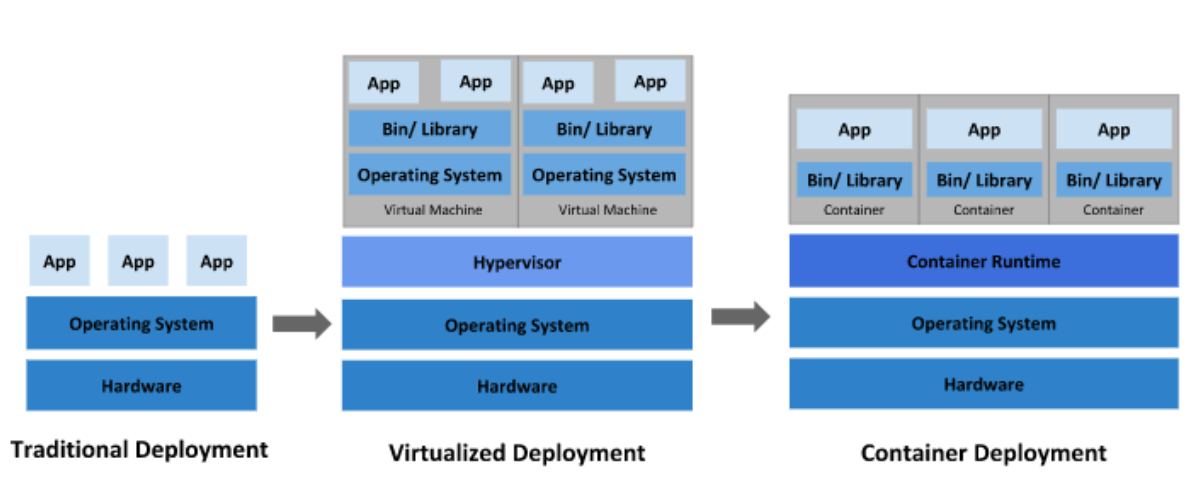

Going back a few decades, applications ran on physical servers and were limited by the resources available on that piece of hardware. Virtualization was adopted to mitigate this issue: hypervisors optimized resource use and provided scalability across multiple servers tied together as a logical cluster. Hypervisors added a layer between the applications and the hardware, isolating applications running in different virtual instances from each other, so that each virtual instance could be added or removed without impacting other instances running on the same host. This was a novel and noble idea until microservices came along and called for more granular isolation than that of virtual instances running on hypervisors.

A microservice architecture promotes development and deployment of applications composed of independent, autonomous, modular, self-contained units, commonly packaged as containers. Containers bundle the application code with the operating system binaries and libraries that are necessary to run the application. The agility provided by containers was quickly recognized because of their portability and their consistent behaviour regardless of the environment hosting them. Containerized applications scale well: containers can be added, updated or removed quickly, and each module of the application workflow can scale independently on demand, which solved many software delivery and deployment problems. Container platforms like Docker became popular because they provide an optimal abstraction that allows developers to build modules of code that can be deployed consistently across many platforms and environments.

Container orchestration is the logical next step for a developer community that is adopting containers. Google’s continued success with, and battle testing of, microservice architectures and containers is steering microservices adoption toward Kubernetes as the default platform. The introduction of Kubernetes solved two key issues for developers managing clusters and containers: abstracting compute, storage, and networks away from their physical implementation, and deploying containers to the clusters that run the code. It also helped maintain policies and standards for production deployments by requiring that all resources (Pods, Configurations, Secrets, Deployments, Volumes, etc.) be expressed in YAML files, which makes it possible to validate what gets deployed and how, and gives granular control over scalability, performance, monitoring and self-healing.

Kubernetes provides:

- Service discovery and load balancing - Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes is able to load balance and distribute the network traffic so that the deployment is stable.

- Storage orchestration - Kubernetes allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

- Automated rollouts and rollbacks - You can describe the desired state for your deployed containers using Kubernetes, and it can change the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers and adopt all their resources to the new container.

- Automatic bin packing - You provide Kubernetes with a cluster of nodes that it can use to run containerized tasks. You tell Kubernetes how much CPU and memory (RAM) each container needs. Kubernetes can fit containers onto your nodes to make the best use of your resources.

- Self-healing - Kubernetes restarts containers that fail, replaces containers, kills containers that don’t respond to your user-defined health check, and doesn’t advertise them to clients until they are ready to serve.

- Secret and configuration management - Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images, and without exposing secrets in your stack configuration.
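To make the bin packing idea concrete, here is a minimal sketch in Python. It is not how the Kubernetes scheduler is implemented; it only illustrates a greedy "first fit, largest first" placement of containers onto nodes based on requested CPU and memory. The `Node` class, the `place` function and all the sample names and numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    # Hypothetical node model: free capacity left on the node.
    name: str
    cpu_millicores: int
    memory_mib: int
    containers: list = field(default_factory=list)


def place(containers, nodes):
    """Greedy first-fit placement; returns the names that did not fit anywhere."""
    unplaced = []
    # Place the largest requests first so big containers are considered early.
    ordered = sorted(containers, key=lambda c: (c[1], c[2]), reverse=True)
    for name, cpu, mem in ordered:
        for node in nodes:
            if node.cpu_millicores >= cpu and node.memory_mib >= mem:
                node.cpu_millicores -= cpu
                node.memory_mib -= mem
                node.containers.append(name)
                break
        else:
            # No node had enough free CPU and memory for this container.
            unplaced.append(name)
    return unplaced


# Illustrative data only: (name, CPU request in millicores, memory request in MiB).
nodes = [Node("node-a", 2000, 4096), Node("node-b", 2000, 4096)]
containers = [
    ("web", 500, 512),
    ("api", 1500, 2048),
    ("db", 1000, 3072),
    ("batch", 1500, 1024),
]
unplaced = place(containers, nodes)
print(unplaced)
```

In this made-up example one container is left without a home even though the cluster as a whole still has spare memory, which is the kind of fragmentation that makes node sizing matter.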

The Kubernetes environment is centered around a few core concepts and components that need to be understood.

- Clusters - A Kubernetes cluster represents a pool of compute, network, and storage resources. It can range in size and must scale; the choice of cluster size depends on the physical or virtual resources available as a baseline. Clusters can run directly on bare metal, nested within hypervisors, or nested within containers.

- Pods - A pod is a group of one or more containers that run in a shared context. Pods are the abstraction at this layer that provides a logical host grouped by application rather than by physical host.

- Replication Controllers - The replication controllers ensure that a certain number of replicas of the pod are running at any one time. In the event of a replica failing, another will be spun up automatically in its place to keep the pre-defined number of replicas active.

- Labels - Labels are key-value pairs assigned to tag objects such as pods within Kubernetes. They let you label and select objects using references that are meaningful to the application environment, and they can be referenced in code as well.

- Kubernetes services - Service discovery is an important part of Kubernetes. Services give names and addresses to sets of pods that are selected using labels. Policies also come into play within services.
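The way labels, selectors and replica counts fit together can be sketched in a few lines of Python. This is a simplified illustration, not the Kubernetes controller code: `matches` and `reconcile` are hypothetical helpers, pods are modelled as plain dictionaries, and only equality-based selectors are considered.

```python
def matches(selector, labels):
    """True when every key-value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(desired, pods, selector):
    """Return how many pods to start (positive) or stop (negative)."""
    running = [p for p in pods if matches(selector, p["labels"]) and p["healthy"]]
    return desired - len(running)


# Illustrative pod list; names and labels are made up.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}, "healthy": True},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}, "healthy": False},
    {"name": "db-1", "labels": {"app": "db"}, "healthy": True},
]

# Three replicas of app=web are wanted, but only one healthy pod matches.
delta = reconcile(3, pods, {"app": "web"})
print(delta)
```

The same selector idea is what lets a service find the pods it should route traffic to, without ever referring to individual pod names or IP addresses.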

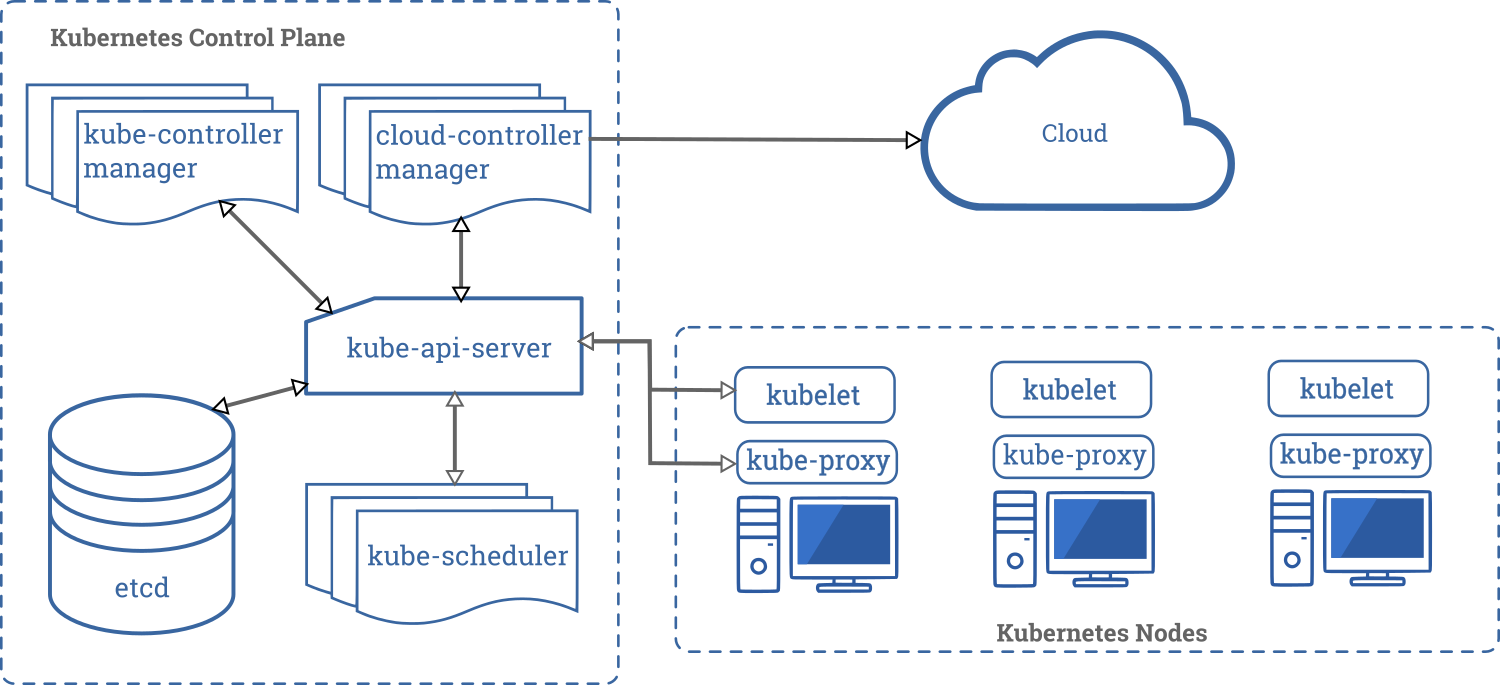

A Kubernetes cluster typically consists of two types of nodes, each responsible for different aspects of functionality:

- Master nodes – These nodes host the control plane of the cluster and are responsible for, among other things, the API endpoint that users interact with and the scheduling of pods across resources. Typically, these nodes are not used to run application workloads. Master nodes are critical to the operation of the cluster: if no masters are running, or the master nodes are unable to reach a quorum, the cluster is unable to schedule and execute applications. Because the master nodes are the control plane for the cluster, special consideration should be given to their sizing and quantity.

- Compute nodes – These nodes are responsible for executing workloads for the cluster users. Compute nodes are, generally speaking, much more disposable. However, extra resources must be built into the compute infrastructure to accommodate any workloads from failed nodes. Compute nodes can be added to and removed from the cluster quickly and easily to accommodate the scale of the applications being hosted. This makes it very easy to burst, and reclaim, resources based on real-time application workload.

The cluster also runs a number of intrinsic Kubernetes services. Depending on the service type, each service is deployed on only one type of node (master or compute) or on a mixture of node types. Some of these services, such as etcd and DNS, are mandatory for the cluster to be functional, while others are optional. These services are typically deployed as pods within Kubernetes:

- etcd – etcd is a distributed key-value datastore. It is used heavily by Kubernetes to track the state and manage the resources associated with the cluster.

- DNS – Kubernetes maintains an internal DNS service to provide local resolution for the applications which have been deployed. This enables inter-pod communication to happen while referencing friendly names instead of internal IP addresses which can change as the container instances are scheduled.

- API Server – Kubernetes deploys the API server to allow interaction between Kubernetes and the outside world. It is deployed on the master node(s).

- The dashboard – an optional component which provides a graphical interface to the cluster.

- Monitoring and logging – optional components which can aid with resource reporting.
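The quorum requirement on master nodes comes down to simple majority arithmetic, sketched below in Python. The sketch assumes a majority-based consensus store such as etcd running one member per master node; the function names are hypothetical.

```python
def quorum(members):
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def failures_tolerated(members):
    """How many members can be lost while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum={quorum(n)}, tolerated failures={failures_tolerated(n)}")
```

Going from three members to four raises the quorum without tolerating any additional failure, which is why odd member counts are the usual choice.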

Sizing Considerations

The size of the cluster (in terms of the number of nodes) shapes both performance and availability in critical ways: more nodes give the cluster more resources to consume and operate on, which is an upside for performance. There is no silver bullet for cluster sizing. While many providers suggest tailor-made options, you should evaluate the following drivers to start the sizing process and keep fine-tuning as you gain visibility; keeping a 10% buffer will help calibrate the design. Factors beyond node count also shape performance and availability significantly, such as resource allocations among pods and namespaces, network quality, the reliability of your underlying infrastructure, and where components sit within the network. Let us go through some high-level attributes and parameters of the key tenets of the cluster.

- Master Nodes - Sizing for master nodes is calculated from the number of pods the cluster is expected to host. Master nodes need a bigger CPU and memory footprint as the number of pods and worker nodes grows; 1 CPU core and 1.5 GB of memory for each 1000 pods is a good start, since the master aggressively caches deserialized versions of resources to ease CPU load.

- Compute Nodes - The number of nodes and the overall environment size depend on the total amount of resources your workloads will consume, which also helps dictate how much capacity loss your environment can handle; in other words, workload profiles dictate the size and type of compute nodes in the total workload landscape. Computational workloads, for example, may demand a core-to-memory ratio of 1 core to 2 GB of memory. Here are the tenets you should be aware of:

- Maximum Nodes per Cluster

- Maximum Pods per Cluster

- Maximum Pods per Node

- Maximum Pods per Core – Depends on the workload footprint

- Node Characteristics – It is advisable to build clusters with a smaller number of nodes to reduce the blast radius and improve manageability and isolation when issues occur. Using multiple identical clusters can help isolate issues while still ensuring scalability. If the namespaces in a single cluster exceed six, break it into smaller clusters for easier manageability. Deciding which apps you would like to run in which cluster is a good input for arriving at the cluster size and the number of nodes per cluster. Once you have decided to run multiple clusters, keep tabs on the costs: for high availability of the control plane, each cluster needs multiple master nodes (usually three), so the total count of master nodes, and with it the cost, goes up. The form factor of the node also plays an important role in cluster design. A physical node and a virtualized node may differ in the resources they have and the performance they offer, so mixing different node types can help the scheduler take advantage of the underlying resources and redistribute workloads to balance availability and performance.

- Type of Workloads – The size and type of cluster depend on whether the workloads are stateful or stateless, the number of namespaces, and so on. Each namespace adds management overhead and also increases the risk of “noisy neighbour” issues. Although Kubernetes namespaces allow you to divide clusters into isolated zones for individual workloads (or groups of workloads), you might still be better off simply breaking your cluster into smaller clusters than trying to add more namespaces.

- Network Design - A shared network must exist between the master and node hosts. If your cluster is designed to have multiple masters for high availability, you must also select an IP to be configured as your virtual IP (VIP) during the installation process. All in all, ensure adequate bandwidth between components, since everything depends on the API calls that run the cluster.
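The starting points mentioned above (1 CPU core and 1.5 GB of memory per 1000 pods for masters, a 1 core to 2 GB ratio for computational workloads, and a 10% buffer) can be turned into a rough first-pass calculation. The Python sketch below is only that: the function names, the rounding up to whole blocks of 1000 pods, and the `spare_nodes` allowance for failed nodes are assumptions made for illustration, not a vendor formula.

```python
import math


def master_size(expected_pods, cores_per_1000=1.0, gb_per_1000=1.5):
    """First-pass master sizing: (CPU cores, memory in GB)."""
    # Assumption: round up to whole blocks of 1000 pods.
    blocks = math.ceil(expected_pods / 1000)
    return blocks * cores_per_1000, blocks * gb_per_1000


def compute_nodes(workload_cores, cores_per_node, buffer_percent=10, spare_nodes=1):
    """First-pass compute node count, with a buffer and spare capacity."""
    # Integer percentage keeps the buffer arithmetic free of float surprises.
    needed_cores = workload_cores * (100 + buffer_percent) / 100
    # spare_nodes is a hypothetical allowance for absorbing workloads from failed nodes.
    return math.ceil(needed_cores / cores_per_node) + spare_nodes


def node_memory_gb(cores_per_node, gb_per_core=2):
    """Memory per node at the 1 core : 2 GB ratio for computational workloads."""
    return cores_per_node * gb_per_core


print(master_size(2500))
print(compute_nodes(workload_cores=100, cores_per_node=16))
print(node_memory_gb(16))
```

Treat the output as a baseline to refine against the limits listed above (maximum nodes per cluster, pods per cluster, pods per node and pods per core) and against what you observe once real workloads are running.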

Kubernetes is inherently scalable. It has a number of tools that allow both applications and the infrastructure they are hosted on to scale in and out based on demand, efficiency and a number of other metrics. There are quite a few considerations to take into account to size the cluster and use it too (pun intended), and the ones above should get you going!

When adopting Kubernetes, regardless of the architecture that you choose, it’s important to understand the ramifications for high availability, scalability, and serviceability of the component services. Containers in the enterprise are becoming mainstream and investments are increasing, but expertise is limited, and challenges are mounting as containers enter production. Smart companies are building skills internally, looking for partners that can help catalyse success, and choosing more integrated solutions that accelerate deployments and simplify the container environment. In many cases, containers are deployed on top of a hypervisor, largely because organizations initially lack the tools and processes to run containers on bare-metal servers. The Cloud Native Computing Foundation (CNCF) created the Certified Kubernetes Administrator (CKA) program in collaboration with The Linux Foundation to help further develop the Kubernetes ecosystem. As one of the highest velocity open source projects, Kubernetes use is exploding.

In summary, one of the current trends is to break up monolithic applications and turn them into microservices. Google, Netflix, and LinkedIn are a few front-running examples of companies that have successfully adopted a microservices strategy that enhanced efficiency while increasing the reliability and scalability of their global application footprints. Running small components within containers and having them deployed and redeployed quickly using Kubernetes turns out to be a great competitive advantage for them. One of the main challenges developers will face in the future is how to focus more on the details of the code than on the infrastructure that code runs on. Serverless is emerging as one of the leading architectural paradigms to address that challenge. There are already very advanced frameworks, such as Knative and OpenFaaS, that use Kubernetes to abstract the infrastructure from the developer and are leading that path for the future of distributed applications!

***

June 2020. Compiled from various publicly available internet sources; the author’s views are personal.